MyCache : Distributed caching engine for Asp.net web farm. Part II : The Internal Details

Introduction

If you are reading this article, you may already have gone through the demonstration of MyCache in the following article:

MyCache : Distributed caching engine for Asp.net web farm. Part I : The Demonstration.

If not, I suggest reading that article first to see the usage, capabilities, and high-level architecture of the distributed caching engine.

This is Part II of the article, which explains the building blocks of the caching engine in detail, along with performance and other issues.

Here we go:

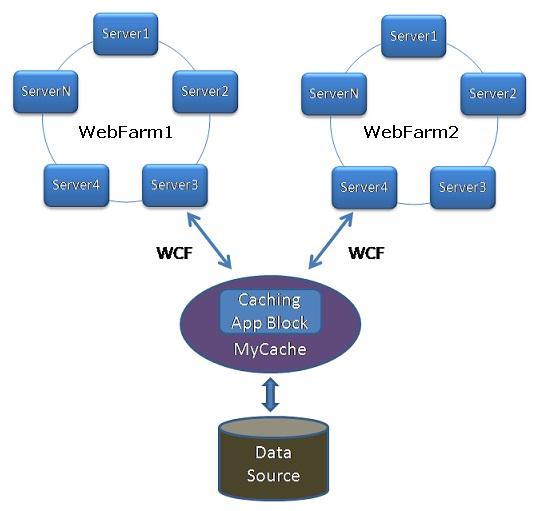

MyCache architecture

As you may already know, MyCache is built entirely on familiar, proven .NET technology. The following are the three main building blocks of MyCache:

Microsoft Caching Application Block : This has been used as the core caching engine for storing objects in memory and retrieving them. A class library has been built around the Caching Application Block to implement the basic caching functionality.

WCF with net.tcp binding : WCF has been used as the communication medium between the caching service and the client applications. The WCF service uses a net.tcp binding to keep communication as fast as possible.

IIS 7 : The WCF service with net.tcp binding is hosted under an Asp.net application in IIS to expose the service to the outside world.

To recall, the following diagram depicts the high-level architecture of MyCache:

Figure : MyCache Architecture

The basic working principle of MyCache-based cache management is as follows:

There is a Cache server which is used by all servers in the web farm to store and retrieve cached data.

When a request arrives at a particular server in the load-balanced web farm, the server asks the Cache server for the data.

Cache server serves the data from the Cache if it is available, or, lets the calling Asp.net application know about the absence of the data. In that case, the application at the calling server loads data from the data storage/service, stores it inside the Cache server and processes output to client.

A subsequent request arrives at a different server, which needs the same data. So, it asks the Cache server to get the data.

Cache server has that particular data in its Cache now. So, it serves the data.

For any subsequent request to any server in the web farm requiring the same data, the data is served fast from the Cache server, and the ultimate performance makes everybody happy.
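The request flow above is the classic cache-aside pattern. The following is a minimal sketch of it, not MyCache's actual code: `ICacheServer`, `FakeCacheServer`, and `WebServer` are hypothetical names, and an in-memory dictionary stands in for the remote Cache server.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the remote Cache server; not part of MyCache.
public interface ICacheServer
{
    object Get(string key);              // returns null on a cache miss
    void Add(string key, object value);
}

public class FakeCacheServer : ICacheServer
{
    private readonly Dictionary<string, object> store = new Dictionary<string, object>();

    public object Get(string key)
    {
        object value;
        return store.TryGetValue(key, out value) ? value : null;
    }

    public void Add(string key, object value)
    {
        store[key] = value;
    }
}

// Represents one web server in the load-balanced farm (hypothetical).
public class WebServer
{
    private readonly ICacheServer cache;
    private readonly Func<string, object> loadFromDataSource;

    // Counts how often this server had to go to the data store.
    public int DataSourceHits { get; private set; }

    public WebServer(ICacheServer cache, Func<string, object> loadFromDataSource)
    {
        this.cache = cache;
        this.loadFromDataSource = loadFromDataSource;
    }

    public object HandleRequest(string key)
    {
        object data = cache.Get(key);
        if (data == null)
        {
            // Cache miss: load from the data store, then share it via the Cache server.
            data = loadFromDataSource(key);
            DataSourceHits++;
            cache.Add(key, data);
        }
        return data;
    }
}
```

If two `WebServer` instances share one `FakeCacheServer`, only the first request for a key reaches the data source; the second server is served from the shared cache.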

A few technical issues

Despite the fact that there are a few well-established distributed caching engines, such as NCache, Memcached, Velocity, and a few others, I decided to develop MyCache.

But, why another one?

Fair question. To me, the answer is as follows:

MyCache is simple and open source. It uses technologies that are basic, stable, and known by most .NET developers. The overall implementation is familiar and easy to customize as needed. So, if you use MyCache, you won’t get the feeling that you are using a third-party service, component, or product that you have little control over or are unsure about. MyCache is not really a “Product”; rather, it is an implementation of a simple idea that lets you build your own home-made distributed caching engine.

Why choose the Caching Application Block as the core caching engine?

I could have tried to develop a caching engine from scratch for storing objects in memory and retrieving them, but this would have meant spending a lot of time building something that already exists, is well tested, and is well accepted across the community. So, my obvious choice was to use the “Caching Application Block” as the “in-memory” caching engine.

Why choose WCF with net.tcp binding in IIS?

The Caching service is built around the Caching Application Block, and the service had to be exposed to the Asp.net client applications so that they can consume it. The following options were available:

- Socket programming

- .NET remoting

- Web Service

- WCF

Socket programming or .NET remoting would have been the fastest way to let the client applications consume the Caching service. But these two were not chosen, simply because they would require too much effort to implement a solid communication mechanism, which is already available in WCF via its different binding options.

A Web service (equivalent to basicHttpBinding or wsHttpBinding in WCF) was the easiest way to expose and consume the caching service, but it was not chosen because SOAP-based communication over HTTP is the slowest of the WCF binding options.

So, WCF with net.tcp binding was the obvious choice to expose and consume the service in the fastest and most reliable manner. Even though hosting the WCF Service in a Windows service was an alternative, IIS-based hosting with net.tcp binding was chosen because it allows everything to be managed from within IIS (though only IIS 7.0 and later versions support net.tcp binding).

OK, I want to use MyCache. What do I have to do?

Thanks if you want to do so. Before deciding to use MyCache, you may need to know a few facts:

MyCache is built upon .NET Framework 4.0 and requires IIS 7 or higher on a Windows Vista, Windows 7, or Windows 2008 machine. So, if your deployment environment meets these criteria, you need to perform the following steps to use MyCache:

Deploy the MyCache WCF Service on IIS with net.tcp binding enabled. (The previous article covers this in detail.)

Add a reference to MyCacheAPI.dll in your Asp.net client application(s), and configure your application’s identity (configure a “WebFarmId” variable in AppSettings).

Add a reference to the WCF Service (Caching Service) and configure the service in web.config if required (optional).

Start using MyCache. (You already know how to do this; see Part I of the article.)

MyCacheAPI : The only API you need to know

Yes. Once the Caching service is configured properly in IIS, the only thing you need to know is MyCacheAPI. Simply adding a reference to MyCacheAPI.dll lets you start using the caching service straight away.

The following is the simplest code for using MyCache inside your Asp.net applications:

    //Declare an instance of MyCache
    MyCache cache = new MyCache();

    //Try to retrieve the data from MyCache
    object data = cache.Get("Key");
    if (data == null)
    {
        //Data is not available in Cache. So, retrieve it from Data source
        data = GetDataFromSystem();
        //Store data inside MyCache
        cache.Add("Key", data);
    }

    //Remove data from Cache
    cache.Remove("Key");

    //Add data to MyCache with specifying a FileDependency
    cache.Add("Key", Value, dependencyFilePath, Cache.NoAbsoluteExpiration, new TimeSpan(0, 5, 10), CacheItemPriority.Normal, new CacheItemRemovedCallback(onRemoveCallback));

    //Reload the data from dependency file and put into MyCache in the callback
    protected void onRemoveCallback(string Key, object Value, CacheItemRemovedReason reason)
    {
        if (cache == null)
        {
            cache = new MyCache();
        }
        if (reason == CacheItemRemovedReason.DependencyChanged)
        {
            //Acquire lock on MyCache service for the Key and proceed only if
            //no lock is currently set for this Key. This has been done to prevent
            //multiple load-balanced web applications updating the same data on MyCache service
            //simultaneously when the underlying file content is modified
            if (cache.SetLock(Key))
            {
                string dependencyFilePath = GetDependencyFilePath();
                object modifiedValue = GetObjectFromFile(dependencyFilePath);
                cache.Add(Key, modifiedValue, dependencyFilePath, Cache.NoAbsoluteExpiration, new TimeSpan(0, 5, 60), CacheItemPriority.Normal, new CacheItemRemovedCallback(onRemoveCallback));
                //Release lock when done
                cache.ReleaseLock(Key);
            }
        }
    }

Serving multiple load-balanced Web Farms

MyCache is able to serve multiple load-balanced web farms, and objects stored by the web application(s) of one web farm are not accessible to the web applications deployed in a different web farm. So, how does MyCache manage this?

Interestingly, this was very simple to implement. I just had to distinguish each web farm by a WebFarmId, which needs to be configured with a different value in the web.config of each application.

So, let us assume we have two different Asp.net code bases (two different Asp.net applications), and each one is deployed in its own load-balanced web farm in IIS. These two applications are configured to use MyCache (by adding a service reference to the MyCache WCF service and a reference to MyCacheAPI.dll).

As we do not want one application to be able to access another application’s data in MyCache, we need to configure the WebFarmId parameter as follows:

In web.config of Application1 : <add key="WebFarmId" value="1"/>

In web.config of Application2 : <add key="WebFarmId" value="2"/>

Now, whenever the application provides a Key to store or retrieve data in MyCache, MyCacheAPI prefixes the Key with the WebFarmId before invoking the MyCache service methods.

The following method, used by MyCacheAPI.dll, prefixes the Key with the WebFarmId value to build an appropriate Key for the corresponding web farm before invoking any service method:

    public string BuildKey(string Key)
    {
        string WebFarmId = ConfigurationManager.AppSettings["WebFarmId"];
        Key = WebFarmId == null ? Key : string.Format("{0}_{1}", WebFarmId, Key);
        return Key;
    }

cache.Add(Key, Value);

This adds an object to the caching server using the provided Key. Before adding the object to the MyCache service, it builds the appropriate Key for distinguishing the web farm. The following is the function definition:

    public void Add(string Key, object Value)
    {
        //Builds appropriate key for corresponding web farm
        Key = BuildKey(Key);
        cacheService.Add(Key, Value);
    }
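To see why the prefix isolates web farms from each other, here is a minimal sketch. `FarmScopedCache` and the shared dictionary are hypothetical stand-ins for MyCacheAPI and the caching service, and the WebFarmId is passed to the constructor instead of being read from AppSettings so that the example is self-contained.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for MyCacheAPI; the dictionary plays the role of the shared cache service.
public class FarmScopedCache
{
    private readonly string webFarmId;
    private readonly Dictionary<string, object> sharedStore;

    public FarmScopedCache(string webFarmId, Dictionary<string, object> sharedStore)
    {
        this.webFarmId = webFarmId;
        this.sharedStore = sharedStore;
    }

    // Same rule as BuildKey in the article: prefix the key with the farm id when one is set.
    public string BuildKey(string key)
    {
        return webFarmId == null ? key : string.Format("{0}_{1}", webFarmId, key);
    }

    public void Add(string key, object value)
    {
        sharedStore[BuildKey(key)] = value;
    }

    // Returns null when this farm has nothing stored under the key.
    public object Get(string key)
    {
        object value;
        return sharedStore.TryGetValue(BuildKey(key), out value) ? value : null;
    }
}
```

With farm ids "1" and "2", the same logical key is stored as "1_Key" and "2_Key", so neither farm sees the other's entry. One thing to keep in mind with this scheme is that ids or keys that themselves contain underscores could collide (for example, farm "1" with key "2_X" and farm "1_2" with key "X" both produce "1_2_X"), so simple farm ids are the safer choice.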

Implementation of FileDependency in MyCache

Asp.net Cache has a cool “FileDependency” feature, which lets you add an object into the cache and specify a file dependency so that, when the file content is modified in the file system, the callback method is invoked automatically, and you can reload the object into the cache (possibly by re-reading the content from the file) within the callback method.

There was a potential approach to implementing this feature in MyCache: use the FileDependency of the Caching Application Block in the MyCache Service. But this approach wasn’t successful, for the following reason:

Nature of the “Duplex” communication of WCF

Yes, it was the nature of “Duplex” communication in WCF that didn’t let us implement the FileDependency feature in an ideal, clean way. The following sections explain this issue in detail.

But before that, we have a precondition. To be able to use the FileDependency feature in MyCache, the caching service needs to be deployed within the same Local Area Network as the Asp.net client applications. The following section explains this in detail:

The dependency file access requirement

Like Asp.net Cache, the Microsoft Caching Application Block also has a CacheDependency feature. So, when the Asp.net client application needs to add an object into MyCache and specify a FileDependency, it is possible to send the necessary parameters to the MyCache WCF Service and specify a FileDependency while adding the object into the Caching Application Block within MyCache. But there is a fundamental file access issue.

MyCache is a distributed caching service, and as long as client Asp.net applications can consume the caching service at a particular endpoint (URL), the caching service could be hosted on any machine on any network. But to implement the FileDependency, the Caching service must be deployed on the same Local Area Network as the Asp.net client applications, so that both the client applications and the Caching server application have access to a network file location.

To understand this better, let us assume we have a web farm where a single Asp.net application has been deployed on multiple load-balanced web servers. All these web servers point to the same codebase, and they all reside in the same Local Area Network. As a result, they can access a file stored somewhere in the Local Area Network using a UNC path (say, \\Network1\Files\Cache\Data.xml).

Now, no matter where the MyCache WCF service is deployed, the server application must have access to the same file (\\Network1\Files\Cache\Data.xml), stored in the same LAN that the load-balanced servers can access. This allows the MyCache service application to detect a change in the underlying file.

The WCF "Duplex" communication issue

The Caching Service application shouldn’t implement any logic that belongs to the client Asp.net applications. So, the responsibility for reading the dependency file and storing its content in the Caching service belongs to the corresponding client application, and the Caching service application should only worry about how to load and store data in the caching engine (Caching Application Block).

So, assuming that the dependency file is stored in a common network location that is accessible to both the Caching Service and the Asp.net client applications on the load-balanced servers, the client application should only send the file location and the necessary parameters to the server method, indicating that a FileDependency should be specified while adding the object to the Cache. The Caching Service, in turn, should add the object to the Caching Application Block with a CacheDependency on the specified file location. When the file content is modified, instead of reloading the file content from disk, the Caching Application Block should fire a callback to the corresponding client Asp.net application so that it re-reads the file content and stores the updated data in the cache.

It seemed promising that WCF supports duplex communication, where not only can the client invoke server functionality, but the server can also invoke functionality on the client application. Unfortunately, this is only possible (or feasible) as long as the client has a “live” communication channel with the server application.

In our case, the flow of information should happen as follows:

The client application invokes a WCF method on the caching service, sending the necessary parameters to add the object to the Cache with a CacheDependency.

The WCF Service adds the object to the Caching Application Block, specifying the FileDependency, and returns. (The client-server conversation stops here and is no longer live.)

The underlying file content is modified somehow (Either manually, or by an external application).

Microsoft Caching Application Block at the server-end detects that change, and invokes the callback method at the Server-end, which in turn tries to invoke the callback at the client-end.

Unfortunately, at this point, the client Asp.net application no longer has a “live” communication channel with the WCF Service, and hence the client callback invocation fails. Ultimately, the client Asp.net application gets no signal from the server about the modification of the file, so it cannot reload the file content and store it in MyCache.

So, how was CacheDependency implemented then?

It was implemented with a very simple approach. I just took the help of Asp.net Cache. Yes, you heard it right!

Even though we are using MyCache for our distributed cache management needs, we shouldn’t forget that our good old friend Asp.net Cache is still available to the Asp.net client applications. So, we can easily use it inside MyCacheAPI.dll, solely to get a notification at the client end when the file content is modified (because the server application is unable to send us such a signal). Once we get the event notification in the client Asp.net applications, it is easy to re-read the file content and update the data in MyCache.

Here is how the Asp.net Cache was used in conjunction with the MyCache WCF Service to implement the CacheDependency feature.

The object is added to the MyCache caching service with the usual cache.Add() method, specifying the FileDependency.

cache.Add("Key", Value, dependencyFilePath, Cache.NoAbsoluteExpiration, new TimeSpan(0, 5, 10), CacheItemPriority.Normal, new CacheItemRemovedCallback(onRemoveCallback));

The MyCache server application adds the object to the Caching Application Block, specifying the CacheDependency file location. At the same time, MyCacheAPI.dll adds the Key to the Asp.net Cache (both as a key and a value), specifying the FileDependency and a callback method.

    //Set the Key (both as a Key and a Value) in Asp.net Cache, specifying a FileDependency and
    //a callback method to get event notification when the underlying file content is modified
    cache.Add(Key, Key, dependency, absoluteExpiration, slidingExpiration, priority, onRemoveCallback);
    cacheService.Insert(Key, objValue, dependencyFilePath, strPriority, absoluteExpiration, slidingExpiration);

At MyCache Service-end when the file content is modified, the Microsoft Caching Application block removes the object from its cache, but, doesn't call any callback method as no callback method is specified.

At the same time, at the Asp.net client end, the callback method is invoked by the Asp.net Cache on all load-balanced sites. Each site tries to obtain a lock for the Key; one site gets the lock and updates the object value in the MyCache Service, while the other sites fail to obtain the lock and hence skip the unnecessary update of the same object in MyCache (which has already been updated by one of the load-balanced applications).

    if (cache.SetLock(Key))
    {
        string dependencyFilePath = GetDependencyFilePath();
        object modifiedValue = GetObjectFromFile(dependencyFilePath);
        cache.Add(Key, modifiedValue, dependencyFilePath, Cache.NoAbsoluteExpiration, new TimeSpan(0, 5, 60), CacheItemPriority.Normal, new CacheItemRemovedCallback(onRemoveCallback));
        //Release lock when done
        cache.ReleaseLock(Key);
    }

Locking and Unlocking

The distributed caching service is used by the load-balanced Asp.net client sites, and multiple sites (within the same web farm) may try to update and read the same data on the MyCache service at the same time. So, it is important to maintain consistency of data so that:

An Update operation (cache.Add(Key, Value)) for a particular Key does not overwrite another ongoing (unfinished) update operation for the same Key.

A Read operation (cache.Get(Key)) for a particular Key does not read data in a “dirty state” (that is, it does not read data before a current update operation for the same Key has finished).

An Update operation on the CacheService from within the CacheItemRemovedCallBack is not performed multiple times, once for each load-balanced application.

Fortunately, every operation on the Microsoft Caching Application Block is "Thread Safe". That means that, until a particular thread completes its operation, no other thread is allowed to access the shared operation, and hence there will be no occurrence of a "Dirty Read" or "Dirty Write".

However, there was a need to implement some kind of locking to prevent the CacheItemRemovedCallBack method from updating the same data on the Caching service multiple times (once for each load-balanced application) when the underlying file content is modified.

LockManager was born. What is LockManager?

LockManager is a class that encapsulates the locking/unlocking logic for the MyCache Service. Basically, this class builds a “Lock Key” for a particular key and puts it into the Cache (using the lock key as both the key and the value) to indicate that MyCache is currently locked for that particular Key.

For example, let us assume the current Key is : 1_Key1 (WebFarmId_Key)

So, as long as the Microsoft Caching Application Block has the lock key “Lock_1_Key1” in its cache, it is assumed that a lock is held on MyCache for the data with key 1_Key1.

When the underlying file content is modified, the CacheItemRemovedCallBack method is fired on each load-balanced application, and each application tries to obtain a lock on the specified key. The first application that obtains the lock for that particular key gets the opportunity to update the data on the Caching Service, and the other applications simply do nothing.

Such "Key based" individual locking ensures that locking occurs at the level of each individual object's operations, and hence a lock on one object doesn't affect another. Ultimately, this reduces the chance of building up a long waiting queue of Read/Write operations on MyCache and thus improves overall performance.
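The "first caller wins" behaviour of a key-based lock can be sketched as follows. This is a minimal illustration under stated assumptions, not MyCache's LockManager: `KeyLockTable` is a hypothetical class, and an in-process `ConcurrentDictionary` stands in for the cache. It uses `TryAdd`, which performs the existence check and the insert as one atomic step, so two simultaneous callers cannot both obtain the same lock.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical per-key lock table; an in-process stand-in, not MyCache's LockManager.
public class KeyLockTable
{
    private readonly ConcurrentDictionary<string, bool> locks =
        new ConcurrentDictionary<string, bool>();

    // Returns true only for the first caller on a given key;
    // TryAdd checks for the key and inserts it atomically.
    public bool SetLock(string key)
    {
        return locks.TryAdd(BuildKeyForLock(key), true);
    }

    // Releasing a lock that is not held is a harmless no-op.
    public void ReleaseLock(string key)
    {
        bool ignored;
        locks.TryRemove(BuildKeyForLock(key), out ignored);
    }

    private static string BuildKeyForLock(string key)
    {
        return string.Format("Lock_{0}", key);
    }
}
```

Because each lock is stored under its own key, a lock on "1_Key1" never blocks an update of "1_Key2".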

Please note that the following two methods are available on MyCacheAPI. They are meant to be used only inside the CacheItemRemovedCallBack method in the Asp.net applications (to ensure that only one load-balanced application updates the data on the MyCache server, by setting a lock with the Key).

SetLock(string Key) : Obtains an update lock for the Key on MyCache

ReleaseLock(string Key) : Releases the update lock

So, in normal Read and Write operations on MyCache (outside the CacheItemRemovedCallBack method), the client code doesn't need to implement any locking, as the locking logic is implemented at the MyCache service end.

The LockManager class is defined as follows:

    /// <summary>
    /// Manages locking functionality
    /// </summary>
    class LockManager
    {
        ICacheManager cacheManager;

        public LockManager(ICacheManager cacheManager)
        {
            this.cacheManager = cacheManager;
        }

        /// <summary>
        /// Releases lock for the specified Key
        /// </summary>
        /// <param name="Key"></param>
        public void ReleaseLock(string Key)
        {
            Key = BuildKeyForLock(Key);
            if (cacheManager.Contains(Key))
            {
                cacheManager.Remove(Key);
            }
        }

        /// <summary>
        /// Obtains lock for the specified Key
        /// </summary>
        /// <param name="Key"></param>
        /// <returns></returns>
        public bool SetLock(string Key)
        {
            Key = BuildKeyForLock(Key);
            if (cacheManager.Contains(Key)) return false;
            cacheManager.Add(Key, Key);
            return true;
        }

        /// <summary>
        /// Builds Key for locking an object in Cache
        /// </summary>
        /// <param name="Key"></param>
        /// <returns></returns>
        private string BuildKeyForLock(string Key)
        {
            Key = string.Format("Lock_{0}", Key);
            return Key;
        }
    }

Performance

MyCache manages cached data inside a different process, possibly on a different machine. So, it is obvious that its performance is nowhere near that of the Asp.net Cache, which stores data “in-memory” within the application’s own process.

Given the distributed cache management requirement, “out of process” storage of cached data is a natural demand, and hence the inter-process communication (or inter-machine network communication) and data serialization/de-serialization overheads cannot be avoided. So, it is necessary to keep the communication and serialization/de-serialization overhead to a minimum.

The net.tcp binding, which is used in the MyCache architecture, is the fastest communication mechanism within WCF across two different machines. Besides, the WCF Service and client applications can always be configured to get the best possible performance out of the system.

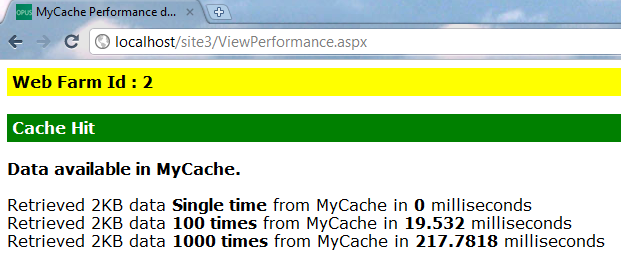

I have developed a simple page (ViewPerformance.aspx) to demonstrate the performance of MyCache on my Core 2 Duo, 3 GB, Windows Vista Premium PC. The client and server components are both deployed on the same machine, and here is a sample performance output:

Figure : A sample performance measurement of MyCache
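If you want to take a similar measurement in your own environment, a timing helper along the following lines may be useful. This is only a sketch: `CacheTimer` is a hypothetical helper, not the code behind ViewPerformance.aspx, and it simply averages the wall-clock time of repeated calls using `Stopwatch`.

```csharp
using System;
using System.Diagnostics;

// Hypothetical timing helper; not part of MyCache.
public static class CacheTimer
{
    // Runs the operation the given number of times and returns
    // the mean elapsed time per call, in milliseconds.
    public static double AverageMilliseconds(Action operation, int iterations)
    {
        if (operation == null) throw new ArgumentNullException("operation");
        if (iterations <= 0) throw new ArgumentOutOfRangeException("iterations");

        Stopwatch watch = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            operation();
        }
        watch.Stop();

        return watch.Elapsed.TotalMilliseconds / iterations;
    }
}
```

For example, `CacheTimer.AverageMilliseconds(() => cache.Get("Key"), 100)` would give the average time of 100 retrievals, where `cache` is a MyCache instance as used earlier in this article. Running the same measurement against the cache service on a separate machine should give a more realistic picture than a single-PC setup.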

The testing environment isn’t convincing in any way (no real server environment, no real load on the system, everything on a single PC). Still, the above data suggests that the overall performance is promising enough for MyCache to be considered as a distributed caching engine. After all, if a retrieval operation from MyCache for moderately sized data completes within at most 1 second in a real application, I would be confident to use it as my next distributed caching engine.

Give it a try, and let me know about any issues, or, improvement suggestions. I’d be glad to hear from you.